As cyber practitioners scramble to upskill themselves on the topic of artificial intelligence (AI) security and their organizations quickly adopt AI tools, platforms, applications, and services, various resources are emerging in the industry to help practitioners process the ever-changing landscape.

One of the most useful of those is the Open Worldwide Application Security Project (OWASP) AI Exchange. OWASP has increasingly positioned itself as a go-to resource for AI security knowledge, including publishing the OWASP LLM Top 10 list in 2023, which documents the Top 10 risks for LLM systems and recommendations on how to mitigate those risks.

The OWASP AI Exchange serves as an open-source collaborative effort to progress the development and sharing of global AI security standards, regulations, and knowledge. It covers AI threats, vulnerabilities, and controls.

Here are some of the core aspects covered by the OWASP AI Exchange, including major threats, vulnerabilities, and controls.

AI Exchange Navigator and general recommendations

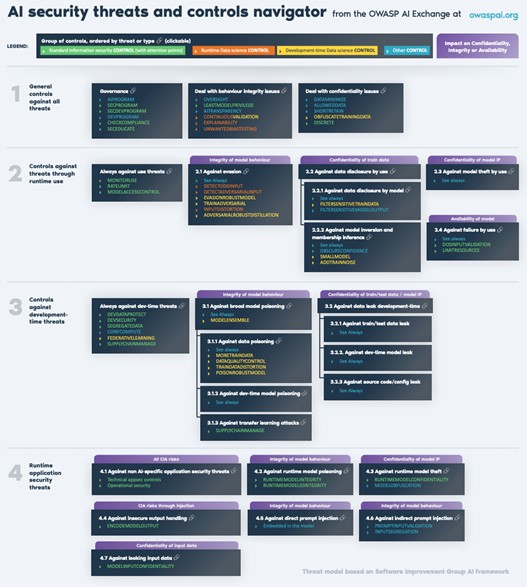

Given the complexity of securing the AI landscape, it is extremely useful to have a resource that allows users to quickly overview various threats and controls and how they relate. The OWASP AI Exchange provides that with its navigator, which covers general controls, controls against runtime threats, controls against development time threats, and runtime application security threats.

OWASP AI security threats and controls manager

OWASP

OWASP AI Exchange also starts off by making some general recommendations. These include activities such as implementing AI governance, extending security and development practices into data science, and adding oversight of AI based on its specific use cases and nuances.

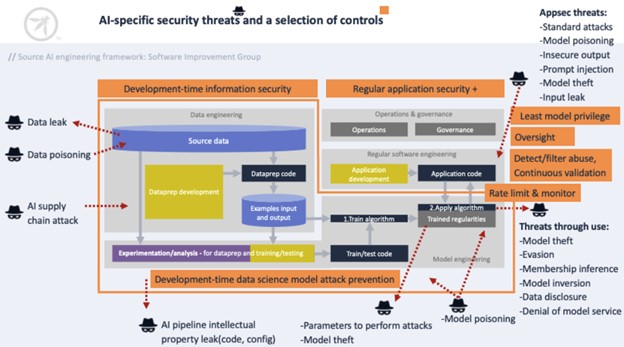

There are many ways in which AI is being leveraged by bad actors and numerous threats have emerged, such as data leaks, poisoning, and even attacks on the AI supply chain. These are captured in the below image from the Exchange.

AI-specific security threats and a selection of controls

OWASP

The Exchange also provides a comprehensive method for organizations to identify relevant threats and accompanying controls. This begins with identifying the threats through activities such as threat modeling, determining responsibility within organizations to address the threats, as well as evaluating external factors such as service providers, software, and suppliers.

From there, organizations are guided to select controls that mitigate the identified threats and cross-reference those controls to existing and emerging standards. Following these steps, the organization will be better positioned to make an informed decision around risk acceptance and ongoing monitoring and maintenance of identified and accepted risks.

Much like other systems, AI system development follows a lifecycle, which is why the AI Exchange recommends activities throughout the phases of the AI software development lifecycle (SDLC). These phases include secure design, development, deployment, and operations and maintenance.

These phases are captured well in other frameworks such as NIST’s Secure Software Development Framework (SSDF), which has phases with accompanying tasks and activities to secure system and software development throughout the SDLC. Given that, let’s take a look at some of the general threats as well as those associated with specific phases of the SDLC, such as development and runtime.

General controls

General controls include activities that are overarching, such as governance. This includes assigning responsibilities for accountability around models and data, as well as risk governance. These efforts are aimed at ensuring that AI initiatives and use aren’t overlooked as part of broader information security management.

Specific general controls include items such as minimizing data and fields that are unnecessary for an application to avoid potential leaks. It also includes ensuring only permitted and authorized data is used in model training activities or in AI systems and platforms. Additionally, data should have a defined lifecycle and not be retained or accessible longer than necessary to minimize risk.

Methods such as tokenization, masking, and encryption can and should be used to protect sensitive information in training datasets and to avoid inadvertent disclosure of sensitive data.

It’s also critical for organizations to implement controls to limit the effects of unwanted behavior, such as impacts on training data, manipulation of AI systems and detecting unwanted behavior and either correcting it or halting the unintended or malicious activities before they can have an impact and notifying respective system maintainers.

Threats through use

Threats through use are defined as those taking place through normal interaction with AI models and systems such as providing input or receiving output. AI Exchange recommends monitoring the use of models and capturing metrics such as input, date, time, and user in logs for incident response.

These may be improper model functioning, suspicious behavior patterns or malicious inputs. Attackers may also make attempts to abuse inputs through frequency, making controls such as rate-limiting APIs. Attackers may also look to impact the integrity of model behavior leading to unwanted model outputs, such as failing fraud detection or making decisions that can have safety and security implications. Recommended controls here include items such as detecting odd or adversarial input and choosing an evasion-robust model design.

Development-time threats

In the context of AI systems, OWASP’s AI Exchange discusses development-time threats in relation to the development environment used for data and model engineering outside of the regular applications development scope. This includes activities such as collecting, storing, and preparing data and models and protecting against attacks such as data leaks, poisoning and supply chain attacks.

Specific controls cited include development data protection and using methods such as encrypting data-at-rest, implementing access control to data, including least privileged access, and implementing operational controls to protect the security and integrity of stored data.

Additional controls include development security for the systems involved, including the people, processes, and technologies involved. This includes implementing controls such as personnel security for developers and protecting source code and configurations of development environments, as well as their endpoints through mechanisms such as virus scanning and vulnerability management, as in traditional application security practices. Compromises of development endpoints could lead to impacts to development environments and associated training data.

The AI Exchange also makes mention of AI and ML bills of material (BOMs) to assist with mitigating supply chain threats. It recommends utilizing MITRE ATLAS’s ML Supply Chain Compromise as a resource to mitigate against provenance and pedigree concerns and also conducting activities such as verifying signatures and utilizing dependency verification tools.

Runtime AppSec threats

The AI Exchange points out that AI systems are ultimately IT systems and can have similar weaknesses and vulnerabilities that aren’t AI-specific but impact the IT systems of which AI is part. These controls are of course addressed by longstanding application security standards and best practices, such as OWASP’s Application Security Verification Standard (ASVS).

That said, AI systems have some unique attack vectors which are addressed as well, such as runtime model poisoning and theft, insecure output handling and direct prompt injection, the latter of which was also cited in the OWASP LLM Top 10, claiming the top spot among the threats/risks listed. This is due to the popularity of GenAI and LLM platforms in the last 12-24 months.

To address some of these AI-specific runtime AppSec threats, the AI Exchange recommends controls such as runtime model and input/output integrity to address model poisoning. For runtime model theft, controls such as runtime model confidentiality (e.g. access control, encryption) and model obfuscation — making it difficult for attackers to understand the model in a deployed environment and extract insights to fuel their attacks.

To address insecure output handling, recommended controls include encoding model output to avoid traditional injection attacks.

Prompt injection attacks can be particularly nefarious for LLM systems, aiming to craft inputs to cause the LLM to unknowingly execute attackers’ objectives either via direct or indirect prompt injections. These methods can be used to get the LLM to disclose sensitive data such as personal data and intellectual property. To deal with direct prompt injection, again the OWASP LLM Top 10 is cited, and key recommendations to prevent its occurrence include enforcing privileged control for LLM access to backend systems, segregating external content from user prompts and establishing trust boundaries between the LLM and external sources.

Lastly, the AI Exchange discusses the risk of leaking sensitive input data at runtime. Think GenAI prompts being disclosed to a party they shouldn’t be, such as through an attacker-in-the-middle scenario. The GenAI prompts may contain sensitive data, such as company secrets or personal information that attackers may want to capture. Controls here include protecting the transport and storage of model parameters through techniques such as access control, encryption and minimizing the retention of ingested prompts.

Community collaboration on AI is key to ensuring security

As the industry continues the journey toward the adoption and exploration of AI capabilities, it is critical that the security community continue to learn how to secure AI systems and their use. This includes internally developed applications and systems with AI capabilities as well as organizational interaction with external AI platforms and vendors as well.

The OWASP AI Exchange is an excellent open resource for practitioners to dig into to better understand both the risks and potential attack vectors as well as recommended controls and mitigations to address AI-specific risks. As OWASP AI Exchange pioneer and AI security leader Rob van der Veer stated recently, a big part of AI security is the work of data scientists and AI security standards and guidelines such as the AI Exchange can help.

Security professionals should primarily focus on the blue and green controls listed in the OWASP AI Exchange navigator, which includes often incorporating longstanding AppSec and cybersecurity controls and techniques into systems utilizing AI.