Cybersecurity leaders have been scrambling to keep pace with their organizations’ rapid exploration, adoption, and use of large language models (LLMs) and generative AI. Companies such as OpenAI, Anthropic, Google, and Microsoft have seen exponential growth in the use of their generative AI and LLM offerings.

Open-source alternatives have also seen significant growth, with AI communities such as Hugging Face becoming widely used, offering models, datasets, and applications to the technology community. Fortunately, as the use of generative AI and LLMs has evolved, so has the guidance from industry-leading organizations such as OWASP, OpenSSF, CISA, and others.

OWASP has been especially important in providing key resources, such as its AI Exchange, AI Security and Privacy Guide, and LLM Top 10. Recently, the group released its “LLM AI Cybersecurity & Governance Checklist,” which provides much-needed guidance on keeping up with AI developments.

OWASP draws distinction among AI types

The checklist distinguishes between broader AI and ML on one hand and generative AI and LLMs on the other. Generative AI is defined as a type of machine learning that focuses on creating new data, while LLMs are a type of AI model used to process and generate human-like text. They make predictions based on the input provided to them, and the output is human-like in nature. We see this with leading tools such as ChatGPT, which already boasts over 180 million users with more than 1.6 billion site visits in January 2024 alone.

The LLM Top 10 project produced the checklist to help cybersecurity leaders and practitioners keep pace with the rapidly evolving space and protect against risks associated with rapid insecure AI adoption and use, particularly from generative AI and LLM offerings.

It can aid practitioners in identifying key threats and ensuring the organization has fundamental security controls in place to help secure and enable the business as it matures in its approach to adopting generative AI and LLM tools, services, and products.

The topic of AI can be incredibly vast, so the checklist is aimed at helping leaders quickly identify some of the key risks associated with generative AI and LLMs and equip them with associated mitigations and key considerations to limit risk to their respective organizations. The group also stresses that the checklist isn’t exhaustive and will continue to evolve as the use of generative AI, LLMs and the tools themselves also evolve and mature.

Checklist threat categories, strategies, and deployment types

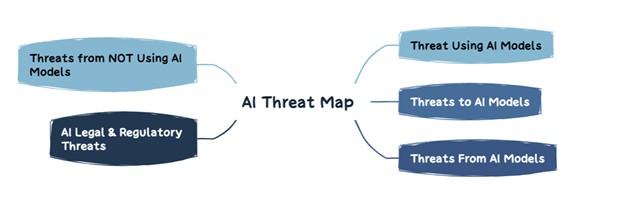

There are various LLM Threat Categories, which are captured in the image below:

OWASP LLM AI Cybersecurity & Governance Checklist

Source: OWASP LLM AI Cybersecurity & Governance Checklist

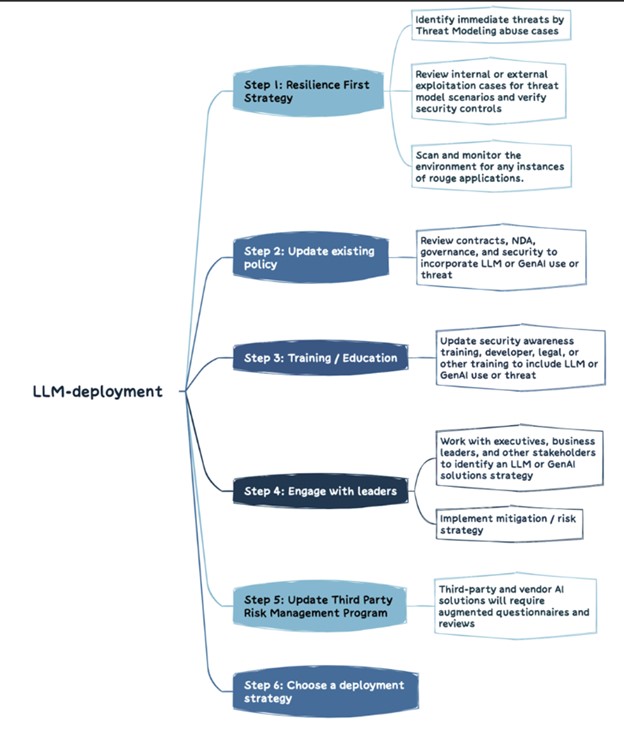

Organizations need to determine their LLM strategy. This is essentially how organizations will handle the unique risks associated with generative AI and LLMs and implement organizational governance and security controls to mitigate these risks. The publication lays out a six-step practical approach for organizations to develop their LLM strategy, as depicted below:

Source: OWASP LLM AI Cybersecurity & Governance Checklist

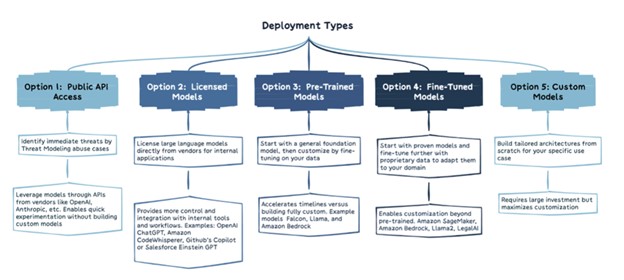

There are also various LLM deployment types, each with its own unique considerations, as captured below They range from public AP access and licensed models all the way to custom models:

Source: OWASP LLM AI Cybersecurity & Governance Checklist

OWASP’s main checklist areas of focus

Here is a walkthrough of the checklist areas identified and some key takeaways from each.

Adversarial risk

This area involves both competitors and attackers and is focused on not just the attack landscape but the business landscape. This includes understanding how competitors are using AI to drive business outcomes as well as updating internal processes and policies such as incident response plans (IRP) to account for generative AI attacks and incidents.

Threat modeling

Threat modeling is a security technique that continues to gain traction in the broader push for secure-by-design systems, advocated by CISA and others. This involves thinking through how attackers can use LLMs and generative AI to accelerate exploitation, assessing the business’s ability to detect malicious LLM use, and examining whether the organization can safeguard connections to LLM and generative AI platforms from internal systems and environments.

AI asset inventory

The adage “you can’t protect what you don’t know you have” applies in the world of generative AI and LLMs as well. This area of the checklist involves having an AI asset inventory for both internally developed solutions as well as external tools and platforms.

It’s critical to understand not only the tools and services being used by an organization but also ownership, in terms of who will be accountable for their use. There are also recommendations to include AI components in SBOMs and catalog AI data sources and their respective sensitivity.

In addition to having an inventory of existing tools in use, there also should be a process to onboard and offboard future tools and services from the organizational inventory securely.
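As a rough illustration of what such an inventory could track, the sketch below records each asset's owner, whether it is internal or external, and the sensitivity of its data sources, with simple onboard and offboard steps. The class names, fields, and sensitivity levels are hypothetical and not taken from the checklist; a real inventory would more likely live in a CMDB or asset management tool.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Sensitivity(Enum):
    # Hypothetical classification levels; substitute your organization's own.
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


@dataclass
class DataSource:
    name: str
    sensitivity: Sensitivity


@dataclass
class AIAsset:
    name: str
    internal: bool                 # True for in-house solutions, False for external tools
    owner: Optional[str] = None    # person or team accountable for the asset's use
    data_sources: list[DataSource] = field(default_factory=list)


class AIInventory:
    def __init__(self) -> None:
        self._assets: dict[str, AIAsset] = {}

    def onboard(self, asset: AIAsset) -> None:
        # Refuse to register an asset that nobody is accountable for.
        if not asset.owner:
            raise ValueError(f"{asset.name} has no accountable owner")
        self._assets[asset.name] = asset

    def offboard(self, name: str) -> AIAsset:
        # Remove the asset and return its record so it can be archived.
        return self._assets.pop(name)

    def handling(self, minimum: Sensitivity) -> list[str]:
        # Names of assets that touch data at or above the given sensitivity.
        return sorted(
            asset.name
            for asset in self._assets.values()
            if any(ds.sensitivity.value >= minimum.value for ds in asset.data_sources)
        )
```

Requiring an owner at onboarding time is one way to enforce the accountability point above; a query such as `handling(Sensitivity.CONFIDENTIAL)` then shows which tools deserve the closest review.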

AI security and privacy training

It’s often quipped that “humans are the weakest link.” However, that doesn’t need to be the case if an organization properly integrates AI security and privacy training into its generative AI and LLM adoption journey.

This involves helping staff understand existing generative AI/LLM initiatives, as well as the broader technology and how it functions, and key security considerations, such as data leakage. Additionally, it is key to establish a culture of trust and transparency, so that staff feel comfortable sharing what generative AI and LLM tools and services are being used, and how.

This trust and transparency within the organization will be a key part of avoiding shadow AI usage. Otherwise, people will continue to use these platforms and simply not bring them to the attention of IT and security teams for fear of consequences or punishment.

Establish business cases for AI use

This one may be surprising, but much like with the cloud before it, most organizations don’t actually establish coherent strategic business cases for using innovative new technologies, including generative AI and LLMs. It is easy to get caught up in the hype and feel you need to join the race or get left behind. But without a sound business case, the organization risks poor outcomes, increased risks, and opaque goals.

Governance

Without governance, accountability and clear objectives are nearly impossible. This area of the checklist involves establishing an AI RACI chart for the organization’s AI efforts, documenting and assigning who will be responsible for risks and governance, and establishing organization-wide AI policies and processes.

Legal

While obviously requiring input from legal experts beyond the cyber domain, the legal implications of AI aren’t to be underestimated. They are quickly evolving and could impact the organization financially and reputationally.

This area involves an extensive list of activities, such as product warranties involving AI, AI EULAs, ownership rights for code developed with AI tools, IP risks and contract indemnification provisions just to name a few. To put it succinctly, be sure to engage your legal team or experts to determine the various legal-focused activities the organization should be undertaking as part of their adoption and use of generative AI and LLMs.

Regulatory

To build on the legal discussions, regulations are also rapidly evolving, such as the EU’s AI Act, with others undoubtedly soon to follow. Organizations should determine their country, state, and government AI compliance requirements, establish consent around the use of AI for specific purposes such as employee monitoring, and clearly understand how their AI vendors store and delete data as well as regulate its use.

Using or implementing LLM solutions

Using LLM solutions requires specific risk considerations and controls. The checklist calls out items such as access control, training pipeline security, mapping data workflows, and understanding existing or potential vulnerabilities in LLM models and supply chains. Additionally, there is a need to request third-party audits, penetration testing and even code reviews for suppliers, both initially and on an ongoing basis.

Testing, evaluation, verification, and validation (TEVV)

The TEVV process is one specifically recommended by NIST in its AI Risk Management Framework (AI RMF). This involves establishing continuous testing, evaluation, verification, and validation throughout AI model lifecycles as well as providing executive metrics on AI model functionality, security, and reliability.
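To make the idea of continuous evaluation with reportable metrics more concrete, here is a minimal sketch that runs a set of test cases against a model and reports a pass rate per category. Everything in it is an assumption for illustration: the `EvalCase` structure, the category names, and the notion that the model is a plain function from prompt to text. It is not a method prescribed by NIST or the checklist.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    category: str                   # e.g. "functionality", "security", "reliability"
    prompt: str
    passes: Callable[[str], bool]   # returns True if the model output is acceptable


def pass_rates(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Run every case against the model and return the pass rate per category."""
    totals: dict[str, int] = defaultdict(int)
    passed: dict[str, int] = defaultdict(int)
    for case in cases:
        totals[case.category] += 1
        if case.passes(model(case.prompt)):
            passed[case.category] += 1
    return {category: passed[category] / totals[category] for category in totals}
```

Run on a schedule or on every model or prompt change, per-category rates like these could feed the kind of executive metrics described above.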

Model cards and risk cards

To ethically deploy LLMs, the checklist calls for the use of model and risk cards, which can help users understand and trust the AI systems and openly address potentially negative consequences such as biases and privacy concerns.

These cards can include items such as model details, architecture, training data methodologies, and performance metrics. There is also an emphasis on accounting for responsible AI considerations and concerns around fairness and transparency.
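A model card can be as simple as a structured record with a completeness check. The sketch below uses the items named above as fields; the field names, the `known_risks` list, and the `missing_fields` helper are hypothetical and do not follow any official model card schema.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    model_details: str = ""
    architecture: str = ""
    training_data_methodology: str = ""
    performance_metrics: dict[str, float] = field(default_factory=dict)
    known_risks: list[str] = field(default_factory=list)  # e.g. bias or privacy concerns


def missing_fields(card: ModelCard) -> list[str]:
    """Return the names of fields that are still empty, in declaration order."""
    return [name for name, value in asdict(card).items() if not value]
```

A check like `missing_fields` could gate a release so that a model is not deployed until its card, including the risk section, has been filled in.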

RAG: LLM optimizations

Retrieval-augmented generation (RAG) is a way to optimize the capabilities of LLMs by retrieving relevant data from specific sources at query time. It sits alongside other optimization approaches, such as fine-tuning a pre-trained model or retraining an existing model on new data to improve performance. The checklist recommends implementing RAG to maximize the value and effectiveness of LLMs for organizational purposes.
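The core RAG loop is small: find the documents most relevant to a question, then place them in the prompt sent to the model. The toy sketch below shows that flow under heavy simplifying assumptions. It scores relevance by counting shared words, where production systems typically use embeddings and a vector store, and it stops at building the prompt without calling any LLM. All function names are illustrative.

```python
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    # Lowercased word counts; a crude stand-in for an embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the query."""
    query_tokens = tokenize(query)
    scored = [(sum((query_tokens & tokenize(doc)).values()), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop documents with no overlap at all.
    return [doc for score, doc in ranked[:k] if score > 0]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the question in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

Because the retrieved text goes straight into the prompt, the sensitivity of the underlying data sources matters just as much here as it does for training data.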

AI red teaming

Lastly, the checklist calls out the use of AI red teaming, which emulates adversarial attacks on AI systems to identify vulnerabilities and validate existing controls and defenses. It emphasizes that red teaming alone isn’t a complete solution for securing generative AI and LLMs but should be part of a comprehensive approach to secure generative AI and LLM adoption.

That said, organizations need to clearly understand the requirements for, and their ability to, red team the services and systems of external generative AI and LLM vendors to avoid violating policies or even finding themselves in legal trouble.